The artificial intelligence industry reached a significant turning point on January 5, 2026, when NVIDIA CEO Jensen Huang announced at CES that the NVIDIA “Vera Rubin” Platform has officially begun full production.

Named after the renowned astronomer Vera Rubin, whose measurements of galaxy rotation provided key evidence for dark matter, this architecture aims to illuminate one of the most challenging problems in AI: efficiently scaling trillion-parameter models. As the successor to the record-setting Blackwell architecture, the Rubin platform is more than a faster chip; it reworks the entire AI production pipeline. At its core are the H300 GPU with HBM4 memory, the Vera CPU, and a networking system that treats the entire data center as a single computer.

- The H300 GPU: A 336-Billion Transistor Powerhouse

The centerpiece of the Rubin platform is the NVIDIA H300 GPU. Where Blackwell featured a dual-die design, Rubin is described as advancing both monolithic and multi-chip module (MCM) designs, built on TSMC’s 3nm-class process (N3P).

The Architecture of the H300

The H300’s significance lies less in its raw transistor count than in how those transistors are used.

- Massive Transistor Count: The H300 boasts 336 billion transistors, a significant increase from Blackwell’s 208 billion. This allows for many more Tensor Cores and CUDA cores.

- NVFP4 Precision: The third-generation Transformer Engine brings a new NVFP4 (4-bit floating point) format, achieving 50 Petaflops of inference performance, a 5x improvement over Blackwell. This precision is crucial for running large models like GPT-5 and Gemini 2.0 with reduced memory requirements.

- Training Power: The H300 offers 35 Petaflops for training, making it 3.5 times faster than its predecessor when preparing the most advanced foundation models.
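
To make the memory argument for 4-bit precision concrete, the following minimal sketch estimates weight storage at several bit widths. It is an illustration only: the one-trillion-parameter model is hypothetical, and the calculation counts raw bits per weight while ignoring any scale-factor metadata, activations, and KV cache.

```python
# Weight-only memory footprint at different precisions.
# Assumption: raw bits per weight only; scale factors, activations,
# and KV cache are deliberately ignored.

def weight_footprint_gb(num_params: float, bits_per_weight: int) -> float:
    """Return weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9

PARAMS = 1e12  # hypothetical one-trillion-parameter model

for name, bits in [("16-bit", 16), ("8-bit", 8), ("4-bit", 4)]:
    print(f"{name:>6}: {weight_footprint_gb(PARAMS, bits):,.0f} GB")
```

Under these assumptions, halving the bit width halves the weight footprint, which is why a 4-bit format matters most for models whose weights would otherwise not fit in GPU memory.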

- Radical Memory Bandwidth: The HBM4 Revolution

Trillion-parameter models are often limited by memory, as they need vast amounts of data to flow in and out of the GPU quickly. The H300 overcomes this with HBM4 (High Bandwidth Memory 4).

Breaking the Memory Wall

- 22 TB/s Bandwidth: This marks a 2.8x increase from Blackwell and ensures that the GPU’s significant compute cores never lack data, even when handling large batch sizes.

- 288GB Capacity per GPU: With 8 to 12 stacks of HBM4, a single H300 GPU can manage massive model weights directly. This reduces the need for constant off-chip communication, which is a primary source of latency in modern AI clusters.

- Advanced Packaging: By using CoWoS-L (Chip on Wafer on Substrate), NVIDIA has placed these memory stacks closer to the logic dies, cutting power usage per bit transferred by 30%.
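
The quoted capacity and bandwidth figures can be combined into a few back-of-the-envelope numbers. The sketch below is a rough illustration, not a performance model: it uses the 288 GB, 22 TB/s, and 50 PFLOPS figures quoted in this article, assumes peak rates are sustained, and takes a hypothetical 500 GB of weights (for example, one trillion parameters at 4 bits each) as the workload.

```python
import math

# Figures quoted in the article (peak values, assumed sustained here).
HBM_CAPACITY_GB = 288
HBM_BANDWIDTH_TBPS = 22
FP4_PFLOPS = 50

def min_gpus_for_weights(weights_gb: float) -> int:
    """Fewest GPUs whose combined HBM can hold the weights."""
    return math.ceil(weights_gb / HBM_CAPACITY_GB)

def full_memory_sweep_ms() -> float:
    """Time to read the entire HBM once at peak bandwidth, in ms."""
    return HBM_CAPACITY_GB * 1e9 / (HBM_BANDWIDTH_TBPS * 1e12) * 1e3

def ridge_point_flops_per_byte() -> float:
    """Roofline ridge point: FLOPs needed per byte moved to stay compute-bound."""
    return FP4_PFLOPS * 1e15 / (HBM_BANDWIDTH_TBPS * 1e12)

print("GPUs for 500 GB of weights:", min_gpus_for_weights(500))
print(f"Full HBM sweep: {full_memory_sweep_ms():.2f} ms")
print(f"Ridge point: {ridge_point_flops_per_byte():.0f} FLOPs/byte")
```

The ridge point is the useful number here: a kernel that performs fewer operations per byte than this is limited by memory bandwidth rather than compute, which is the "memory wall" the section describes.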

- The NVIDIA Vera CPU: Built for Agentic AI

For the first time, NVIDIA has designed a CPU specifically for managing the complex logic needed for Agentic AI and reasoning. The Vera CPU replaces the Grace CPU in the high-end Superchip configurations.

The “Olympus” Core Architecture

The Vera CPU includes 88 custom “Olympus” cores. Unlike general-purpose server CPUs, these cores are crafted for:

- High-Speed Data Shuffling: Efficiently moving data between the GPU, memory, and network.

- Spatial Multithreading: Vera employs physical resource partitioning to run 176 hardware threads, ensuring immediate execution for the complex logic often required in AI agents that make decisions and call external APIs.

- 1.2 TB/s Memory Bandwidth: Paired with LPDDR5X, it delivers 2.4 times the bandwidth of Grace, removing the CPU-related bottleneck in the AI process.

- Networking: NVLink 6 and the NVL144 Rack

To scale to trillion parameters, thousands of GPUs must work together seamlessly. NVIDIA has revamped its interconnect and networking systems to handle this “AI Supercomputer” scale.

NVLink 6 Switch

The new NVLink 6 offers 3.6 TB/s of bidirectional bandwidth per GPU. Aggregate NVLink (scale-up) bandwidth across the rack reaches 260 TB/s, allowing GPUs in different parts of the rack to share data as if they were on the same chip.

Vera Rubin NVL144

The flagship system is the NVL144 rack-scale setup.

- 144 GPUs per Rack: This doubles the density of the previous NVL72.

- 3.6 Exaflops of AI Power: A single rack can perform tasks that previously required an entire data center.

- Full Liquid Cooling: Due to the considerable density (120kW+ per rack), the NVL144 is entirely liquid-cooled. It uses a special warm-water cooling loop that removes the need for energy-consuming chillers.
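
As a sanity check on the rack-level numbers, the sketch below divides each quoted rack total by the corresponding per-GPU figure from this article. Both totals come out at roughly 72 units rather than 144. One possible reading, which is an assumption and not something the article states, is that "144" counts individual GPU dies while the per-GPU figures describe a package containing two of them.

```python
# Per-GPU and rack-level figures as quoted in the article.
PER_GPU_FP4_PFLOPS = 50
PER_GPU_NVLINK_TBPS = 3.6
RACK_FP4_PFLOPS = 3600   # 3.6 exaflops expressed in petaflops
RACK_NVLINK_TBPS = 260

def implied_units(rack_total: float, per_unit: float) -> float:
    """How many per-unit contributions add up to the rack total."""
    return rack_total / per_unit

for label, rack, unit in [
    ("Compute", RACK_FP4_PFLOPS, PER_GPU_FP4_PFLOPS),
    ("NVLink", RACK_NVLINK_TBPS, PER_GPU_NVLINK_TBPS),
]:
    print(f"{label}: rack total corresponds to {implied_units(rack, unit):.1f} units")
```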

- The Engineering Challenge: Liquid Immersion and Power

As performance increases, so does power consumption. The Rubin platform tackles the thermal challenge with innovative engineering.

Advanced Liquid Cooling

The H300 GPU has a Thermal Design Power (TDP) that can exceed 1,000 Watts. To address this, NVIDIA has partnered with companies like Vertiv and Schneider Electric to create:

- Direct-to-Chip Liquid Cooling: Micro-channels in the GPU cold plate carry heat away directly at the die, before it can spread through the package.

- Immersion Readiness: The Rubin architecture is suitable for “single-phase immersion,” where the entire server blade is submerged in a non-conductive coolant. Facilities built this way are said to reach a Power Usage Effectiveness (PUE) of 1.02, which would make it the most energy-efficient AI platform to date.
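
PUE is defined as total facility power divided by the power delivered to IT equipment, so a value of 1.02 means only 2% overhead for cooling and power distribution. The sketch below applies that definition to the 120 kW rack figure mentioned earlier; the PUE of 1.5 used for comparison is a hypothetical value chosen for illustration, not a figure from this article.

```python
def overhead_kw(it_power_kw: float, pue: float) -> float:
    """Non-IT power (cooling, distribution losses) implied by a PUE value.

    PUE = total facility power / IT power, so overhead = IT power * (PUE - 1).
    """
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return it_power_kw * (pue - 1.0)

RACK_IT_KW = 120  # per-rack figure quoted in the article

for label, pue in [("immersion (quoted)", 1.02), ("hypothetical baseline", 1.5)]:
    print(f"{label}: {overhead_kw(RACK_IT_KW, pue):.1f} kW overhead per rack")
```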

- The Software Stack: CUDA 13 and Beyond

A great chip needs software to function effectively. Alongside the Rubin platform, NVIDIA introduced CUDA 13.

- Native FP4 Support: Libraries such as cuDNN and TensorRT have been rebuilt to use the NVFP4 precision format automatically.

- AI Agent Blueprints: New software templates enable developers to deploy “agentic” workflows on the Vera CPU, using the 176 threads for quick reasoning.

- Confidential Computing: Rubin provides hardware-level encryption for data in use, ensuring that sensitive information stays secure, even when training foundation models.

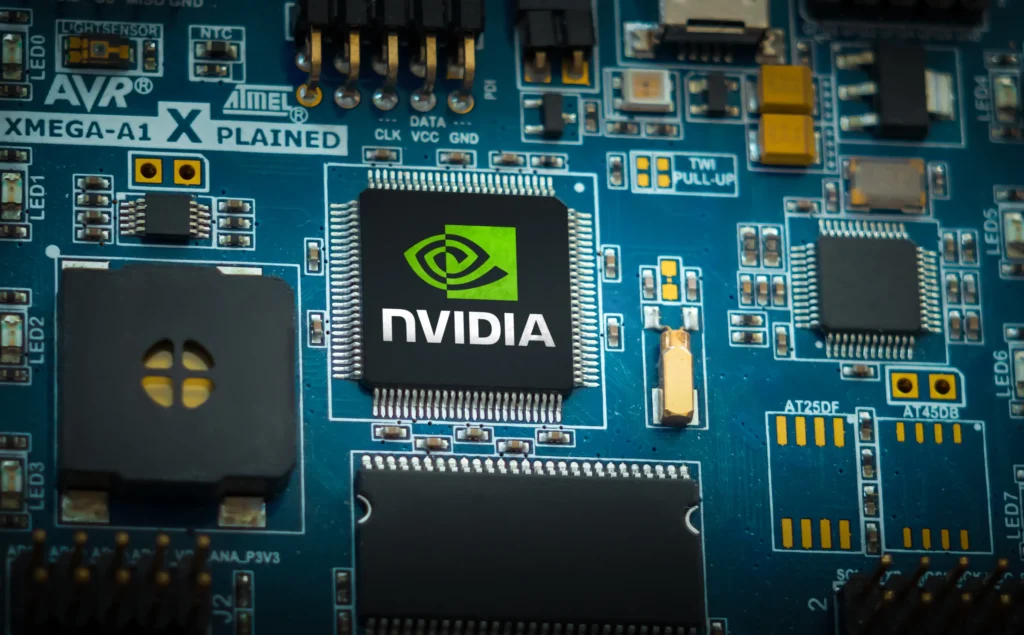

- Comparison: Blackwell (B200) vs. Vera Rubin (H300)

| Feature | Blackwell (B200) | Vera Rubin (H300) | Improvement |
|---|---|---|---|
| Transistors | 208 Billion | 336 Billion | ~1.6x Increase |
| Inference (FP4) | 10 PFLOPS | 50 PFLOPS | 5x Faster |
| Training (FP8) | 10 PFLOPS | 35 PFLOPS | 3.5x Faster |
| Memory Tech | HBM3e | HBM4 | Generational Leap |
| Memory Bandwidth | 8 TB/s | 22 TB/s | 2.8x Higher |
| Interconnect | NVLink 5 (1.8 TB/s) | NVLink 6 (3.6 TB/s) | 2x Bandwidth |
| CPU Architecture | Grace (Neoverse) | Vera (Custom Olympus) | Dedicated AI Logic |
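
The improvement column can be reproduced directly from the two spec columns. The short sketch below recomputes each ratio from the figures in the table; the dictionary keys are labels chosen for this example only.

```python
# (Blackwell B200, Vera Rubin H300) values taken from the comparison table.
SPECS = {
    "Transistors (billions)": (208, 336),
    "Inference FP4 (PFLOPS)": (10, 50),
    "Training FP8 (PFLOPS)": (10, 35),
    "Memory bandwidth (TB/s)": (8, 22),
    "NVLink per GPU (TB/s)": (1.8, 3.6),
}

def improvement(old: float, new: float) -> float:
    """Generational improvement expressed as a simple ratio."""
    return new / old

for feature, (blackwell, rubin) in SPECS.items():
    print(f"{feature}: {improvement(blackwell, rubin):.2f}x")
```

The memory bandwidth ratio works out to 2.75, which the table rounds to 2.8x, and the transistor ratio of about 1.62 is shown as ~1.6x.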

- Industry Impact: 10x Lower Token Costs

NVIDIA is not only making faster chips; it is also making AI more affordable. Jensen Huang stated that the Vera Rubin platform cuts inference token costs by up to a factor of 10.

Who Benefits?

- Healthcare: Trillion-parameter models can now simulate entire biological systems, speeding up drug discovery from years to weeks.

- Climate Science: The outstanding 22 TB/s bandwidth allows for precise weather simulations that can predict extreme events with 99% accuracy.

- Robotics: The Vera CPU’s “Agentic” abilities enable robots to process real-world data in real-time, evolving from programmed movements to actual reasoning.

- The Economics of the AI Factory

Building a Rubin-based AI factory is a billion-dollar venture, but the projected return on investment is substantial. Because training a Mixture-of-Experts (MoE) model is said to require one quarter as many GPUs as on Blackwell, organizations could save billions in energy and infrastructure over three years. This makes deploying trillion-parameter models financially feasible for mainstream companies, not just for the major tech giants.

Final Thoughts: Full Production and Global Rollout

The NVIDIA Vera Rubin Platform is now in full production. Volume shipments are expected to reach partners like Microsoft, AWS, Google Cloud, Meta, and Tesla in the second half of 2026.