In the early 2020s, Large Language Models (LLMs) captivated the world. These models, often called “brains in a box,” could write poetry and code. However, by 2026, the story had shifted from screens to streets. We entered the era of Embodied AI in Industry. Artificial intelligence was no longer just a digital advisor but a physical agent that could navigate, manipulate, and master the three-dimensional world.

From BMW’s assembly lines, where cars autonomously take shape, to Amazon’s massive robotic fleet that manages global commerce, the “Ghost in the Machine” has gained a physical presence. This deep dive explores the technology behind it, the industrial leaders driving the change, and the significant challenges that remain before we achieve a fully autonomous future.

I. The Anatomy of Embodied AI: Building a Physical Mind

To grasp Embodied AI, we first need to differentiate it from “Classic Robotics.” A traditional industrial robot is like a puppet—it follows a strict script. If a human places a box two inches out of its programmed path, the robot will attempt to grasp at nothing.

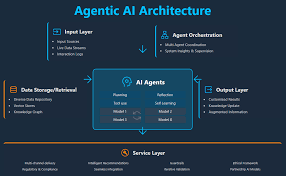

Embodied AI is different; it is Agentic. It senses its environment, reasons about changes, and performs actions in a feedback loop. This progress relies on four “Organs of Intelligence”:

- The Vision-Language-Action (VLA) Model

The VLA model emerged in 2025 and 2026. Systems like NVIDIA’s GR00T, Google’s Gemini Robotics 1.5, and Physical Intelligence’s π0 allow robots to interpret the world through one lens.

- Vision: The model processes 3D Lidar and RGB-D camera feeds to create a “point cloud” of its surroundings.

- Language: It can understand complex, multi-step commands such as “Find the blue crate, check if it’s empty, and then move it to the loading dock.”

Before VLA models, coding each step separately was necessary. Now, a single neural network manages the entire process, enabling robots to show “common sense,” like pushing an obstacle aside to achieve a goal.

- The Sim-to-Real Pipeline (The Matrix for Robots)

Training a robot in the real world can be costly; a single crash can cost $100,000. To address this, companies employ Digital Twins. Using platforms like NVIDIA Omniverse, robots are trained in high-fidelity physics simulations. In these “robotic dreamscapes,” a robot can practice a task 100,000 times in just one afternoon. When the AI is transferred into a physical robot, it already has the muscle memory to deal with gravity, friction, and inertia.

- Edge Computing (Thinking Locally)

Latency hampers Embodied AI. If a robot must send a video frame to a cloud server elsewhere to ask, “Is that a human in my way?”, it will crash before receiving an answer. By 2026, robots are equipped with specialized “Inference Chips,” such as Amazon’s Inferentia or Tesla’s FSD Hardware, allowing them to process information locally in under 10 milliseconds.

- Multimodal Feedback (The Sense of Touch)

Modern robots don’t just see; they also feel. Tactile sensors in their fingertips enable AI to differentiate the pressure needed to pick up a light bulb versus a lead pipe. This “haptic intelligence” finally enables robots to move beyond the heavy metal world of car frames into the delicate realm of retail and logistics.
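The sense-reason-act loop described above can be illustrated with touch. The following is a minimal sketch, not any vendor's controller: `SimulatedObject`, its force values, and the slip signal are all hypothetical stand-ins for a real fingertip sensor.

```python
from dataclasses import dataclass


@dataclass
class SimulatedObject:
    """Hypothetical object: slips below hold_force_n, is damaged above break_force_n."""
    name: str
    hold_force_n: float
    break_force_n: float

    def slips(self, grip_force_n: float) -> bool:
        # Stand-in for a tactile sensor reporting slip at the fingertips.
        return grip_force_n < self.hold_force_n


def find_grip_force(obj: SimulatedObject, start_n: float = 0.5,
                    step_n: float = 0.5, max_steps: int = 200) -> float:
    """Raise grip force in small steps until the sensor stops reporting slip."""
    force = start_n
    for _ in range(max_steps):
        if not obj.slips(force):   # sense: no slip detected
            return force           # act: hold at this force
        force += step_n            # reason: still slipping, squeeze a little harder
        if force > obj.break_force_n:
            raise RuntimeError(f"cannot hold {obj.name} without damaging it")
    raise RuntimeError("no stable grip found")


# Made-up numbers chosen only to contrast a fragile and a heavy object.
light_bulb = SimulatedObject("light bulb", hold_force_n=2.0, break_force_n=8.0)
lead_pipe = SimulatedObject("lead pipe", hold_force_n=40.0, break_force_n=500.0)

bulb_force = find_grip_force(light_bulb)
pipe_force = find_grip_force(lead_pipe)
print(bulb_force, pipe_force)
```

The same loop settles on a gentle grip for the bulb and a firm one for the pipe because it reacts to what the sensor reports rather than to a pre-programmed force.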

II. The Industrial Vanguard: BMW and the Software-Defined Factory

BMW has emerged as the leading example of the “Software-Defined Factory.” The iFactory initiative in Spartanburg and Regensburg is now more than just a place for assembling cars; it functions as a giant, embodied computer.

The “Self-Driving” Production Line

BMW’s Automated Driving In-Plant (AFW) has removed one of the oldest inefficiencies in car manufacturing. Traditionally, humans had to drive finished cars to shipping lots, requiring shuttle buses to return drivers.

The AI Solution: Using external Lidar sensors mounted on the factory walls, the factory’s central AI steers each car through its onboard computers.

The Result: The cars navigate the factory independently, weaving around workers and arriving at the correct shipping bay. The factory itself takes on the role of “driver.”

Humanoid Integration: Figure 02 and 03

In 2025, BMW completed a significant pilot using Figure 02 humanoid robots. These bots loaded sheet metal parts into welding fixtures, a job requiring precision within 5 millimeters. By early 2026, data indicated a 99% success rate over more than 1,000 hours. BMW is now introducing Figure 03, featuring over 20 degrees of freedom in the hands, enabling it to manage small screws and cables that were previously reserved for humans.

III. The Logistic Symphony: Amazon’s Million-Robot Fleet

Amazon’s implementation of Embodied AI stands out for its scale. By 2026, the company operates over one million robots, a population larger than that of many mid-sized cities.

DeepFleet: The Orchestrator

The main challenge in a warehouse isn’t just moving boxes; it’s preventing “robotic gridlock.” If 500 robots try to enter the same aisle, the system can break down. Amazon’s DeepFleet AI functions as a “Strategic Nervous System.” It uses predictive modeling to foresee bottlenecks 15 minutes ahead of time, adjusting robots’ paths dynamically. This has boosted warehouse efficiency by 10%, a significant improvement when handling billions of packages.
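To make the idea of foreseeing gridlock concrete, here is a toy sketch of congestion-aware rerouting. It is not how DeepFleet works internally, which the source does not describe; the aisle capacity, route format, and greedy strategy are all assumptions, and the sketch does not check whether an alternative path is itself congested.

```python
from collections import Counter

AISLE_CAPACITY = 2  # assumed limit on robots per aisle per time step


def predicted_load(routes: dict[str, list[str]]) -> Counter:
    """Count how many robots plan to be in each aisle at each time step."""
    load = Counter()
    for path in routes.values():
        for t, aisle in enumerate(path):
            load[(t, aisle)] += 1
    return load


def reroute(routes: dict[str, list[str]],
            alternatives: dict[str, list[str]]) -> dict[str, list[str]]:
    """Greedily move robots onto an alternative path if their route hits an overloaded aisle."""
    planned = dict(routes)
    for robot in sorted(planned):
        load = predicted_load(planned)  # recompute after every change
        overloaded = any(load[(t, aisle)] > AISLE_CAPACITY
                         for t, aisle in enumerate(planned[robot]))
        if overloaded and robot in alternatives:
            planned[robot] = alternatives[robot]
    return planned


# Three robots all plan to use aisle A and then aisle B at the same time.
routes = {"r1": ["A", "B"], "r2": ["A", "B"], "r3": ["A", "B"]}
alternatives = {name: ["C", "D"] for name in routes}

new_routes = reroute(routes, alternatives)
print(new_routes)
print(max(predicted_load(new_routes).values()))
```

A production system would forecast minutes ahead over thousands of robots; the point here is only that the conflict is detected in the plan, before any robot reaches the aisle.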

The “Sparrow” and the “Proteus”

Sparrow: This robotic arm uses suction and AI-driven vision to identify and pick up over 65% of Amazon’s inventory, which includes millions of unique items. It can differentiate between soft t-shirts and rigid books, adjusting its grip in real-time.

Proteus: Amazon’s first fully autonomous mobile robot does not need fencing. It relies on a “safety bubble” of sensors to navigate safely around human workers, enabling a collaborative environment without barriers.

IV. The Turning Point: Why 2026 is Different

For decades, robotics showcased impressive demos that often failed in real-world settings. What has changed?

The Cost Collapse: In 2022, a capable humanoid robot cost around $250,000. By 2026, that price has fallen to $20,000 to $50,000 for industrial units. When a robot’s price nears a human worker’s annual salary, the economic “tipping point” is reached.

The Rise of Humanoids: 2026 is dubbed the “Year of Delivery.” Tesla’s Optimus Gen 2, 1X’s NEO, and Boston Dynamics’ Electric Atlas have progressed from laboratory prototypes to trials on factory floors. Unlike specialized robots, these humanoids can use human tools and navigate stairs, making them easily adaptable for existing setups.

Standardization: The industry has shifted away from proprietary “black boxes.” Open-source frameworks like ROS 2 (Robot Operating System) and collaborative data projects like Open X-Embodiment have established a common language for robots. An AI model trained on a robotic arm in Japan can function on a humanoid in Germany.
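The cost tipping point mentioned above comes down to simple arithmetic. The sketch below uses the robot prices quoted in this article; the annual labor cost of $50,000 is a hypothetical figure picked for illustration, and running costs such as maintenance and electricity default to zero.

```python
def payback_years(robot_price: float, annual_labor_cost: float,
                  annual_running_cost: float = 0.0) -> float:
    """Years until cumulative labor savings cover the purchase price."""
    net_saving = annual_labor_cost - annual_running_cost
    if net_saving <= 0:
        raise ValueError("robot never pays for itself under these assumptions")
    return robot_price / net_saving


ASSUMED_ANNUAL_LABOR_COST = 50_000  # hypothetical, not from the article

for price in (250_000, 50_000, 20_000):
    years = payback_years(price, ASSUMED_ANNUAL_LABOR_COST)
    print(f"${price:,} robot pays back in {years:.1f} years")
```

Under these assumptions the 2022 price takes five years to recover, while the 2026 prices pay back in a year or less, which is the shift the tipping-point argument rests on.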

V. The Critical Challenges: The “Wall” of Atoms

Despite impressive advancements, Embodied AI still faces three significant obstacles that keep engineers awake at night.

- Moravec’s Paradox

High-level reasoning, like winning at Go or passing the Bar Exam, is comparatively easy for AI. In contrast, low-level sensorimotor skills, such as walking across uneven grass or folding a fitted sheet, are remarkably hard. We have AI capable of writing a physics thesis but unable to pick up a slippery grape without crushing it.

- The Power Density Gap

An LLM operating in the cloud enjoys a constant electricity supply. An embodied robot must carry its energy with it. Current battery technology limits most high-performance humanoids to 2 to 4 hours of intensive work. Until we develop breakthroughs in solid-state batteries or extreme energy efficiency, these robots will require frequent “coffee breaks” at charging stations.

- The Data Desert

LLMs have a built-in advantage: they were trained on text from across the internet, amounting to trillions of words. There is no equivalent “internet of physical actions.” Teaching a robot to open a door takes thousands of videos of doors being opened, recorded from the robot’s perspective and paired with precise motor data. This “Action Data” is one of the most sought-after resources in robotics in 2026, and companies compete to “mine” real-world movements to improve their models.

In logistics, humans manage exceptions—the 5% of complex tasks the AI can’t handle—while robots deal with 95% of the volume.

In trade, nations like India leverage their large IT workforce to act as “Remote Operators” for these global robot fleets, providing teleoperation support when an AI encounters difficulties.
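The division of labor described above, with robots handling routine volume and humans handling exceptions, can be sketched as a confidence-based handoff. This is an illustrative assumption about how such routing might work, not a description of any deployed system; `Task`, `dispatch`, and the 0.8 threshold are hypothetical.

```python
from dataclasses import dataclass

CONFIDENCE_THRESHOLD = 0.8  # assumed cutoff below which a human takes over


@dataclass
class Task:
    task_id: str
    confidence: float  # the robot policy's self-reported confidence, 0.0 to 1.0


def dispatch(tasks: list[Task]) -> tuple[list[str], list[str]]:
    """Split tasks into those the robot handles and those escalated to a remote operator."""
    autonomous, escalated = [], []
    for task in tasks:
        if task.confidence >= CONFIDENCE_THRESHOLD:
            autonomous.append(task.task_id)
        else:
            escalated.append(task.task_id)
    return autonomous, escalated


# 19 routine picks and one awkward item, mirroring the 95% / 5% split.
tasks = [Task(f"pick-{i}", 0.99) for i in range(19)]
tasks.append(Task("pick-tangled-cable", 0.35))

autonomous, escalated = dispatch(tasks)
print(len(autonomous), escalated)
```

In practice the escalated queue is where teleoperation comes in: a remote operator resolves the hard case, and the recorded correction can become new training data.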

The success of BMW and Amazon is not just about efficiency; it serves as a proof of concept for a new kind of civilization. A civilization where intelligence is no longer confined to a desk but is mobile, tangible, and ready to take on the physical demands of humanity. As we approach 2030, the pressing question is no longer if robots will become part of our daily lives, but how we will reshape our world to welcome our new, silicon-based companions.

Key Takeaways for 2026

Agentic AI is Core: Robots now plan and reason; they don’t merely follow scripts.

Humanoids are Commercial: 2026 marks the first mass delivery of general-purpose humanoid robots (Tesla, 1X, Figure).

The Battery Hurdle: Energy density remains the chief barrier for 24/7 autonomous operations.