The year 2026 marks a significant turning point in personal computing. For almost 20 years, the “Screen-Down” era dominated our lives, a time defined by the blue light of smartphones and the isolation that came from focusing on a screen instead of the real world. Today, we have officially entered the “Eyes-Up” era. This shift is not just about a new gadget; it is about smart glasses evolving into the main way we interact with generative AI.

Smart glasses now combine advanced voice interfaces, real-time translation, and high-quality hands-free recording. They have evolved from a niche novelty into an essential tool for cognitive enhancement. This analysis explores the technological, social, and economic landscape of the smart glasses revolution in 2026.

- The Generative AI Voice Interface: Your Digital Sidekick

The standout feature of 2026 smart glasses is the Generative AI Voice Interface. Unlike the rigid voice assistants of the early 2020s, the modern AI in your glasses is a conversational partner.

Multimodal Understanding

Today’s AI, powered by models like Gemini 3 and GPT-5, doesn’t just listen to your voice; it also “sees” through the glasses’ cameras, which gives it rich visual context. For example, if you are looking at a complex engine part and ask, “How do I loosen this?” the glasses analyze the visual input and respond, “Turn the silver bolt counter-clockwise using a 10mm wrench.”

Ambient Intelligence

We are now seeing Ambient Intelligence, where AI acts proactively instead of just reacting. By 2026, smart glasses can:

- Anticipate Needs: Remind you of a person’s name as they approach you at a conference, using facial recognition and synced LinkedIn data.

- Contextual Briefings: Give a summary of your next meeting’s agenda when you arrive at the boardroom.

- Whisper Navigation: Instead of consulting a map, you hear subtle directions that seem to come from the street you need to turn onto.

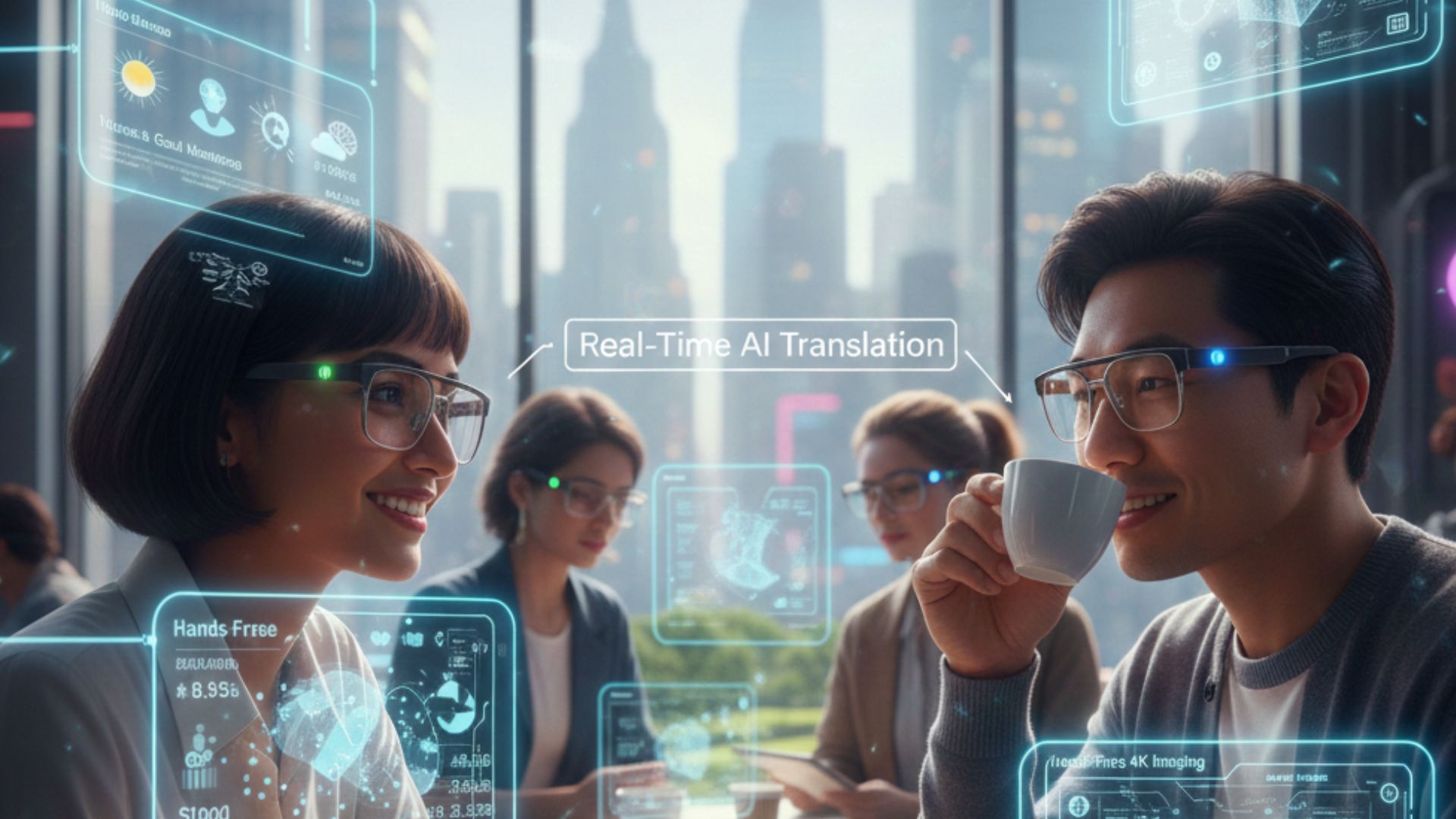

- Real-Time Translation: The End of Language Barriers

The most impactful application of 2026 smart glasses is Real-Time Translation, a feature that has changed international business, tourism, and diplomacy.

Auditory and Visual Synergy

Smart glasses use a dual approach to translation.

- Audio Translation: Bone-conduction speakers deliver a translated audio stream directly to your inner ear, preserving the original speaker’s tone and cadence.

- Visual Subtitles: For models with Micro-LED displays, like the 2026 Even Realities G2 or XREAL 1S, translated subtitles appear in your lower field of vision.

The Social Impact

In 2026, language barriers have diminished. A student studying abroad can attend a lecture in German while following along with English subtitles in real time. This technology has enabled a more global workforce, where remote collaboration thrives despite language differences.

- Hands-Free Recording: The First-Person POV Revolution

The move toward hands-free recording has changed the “Creator Economy” and many professional fields.

The Content Creator Perspective

Vlogging has shifted from “handheld” to “POV.” In 2026, the most popular content on social platforms is captured through smart glasses. This allows creators to show their audience exactly what they see while keeping their hands free for activities such as extreme sports, artisanal cooking, or travel. The Ray-Ban Meta Gen 3 and Oakley Vanguard models now support 4K first-person recording with advanced stabilization.

Professional and Emergency Use

- Healthcare: Surgeons record procedures from their viewpoint, creating valuable training material for students.

- First Responders: Firefighters and EMTs record and stream their interventions hands-free, allowing senior professionals to guide them in real time.

- Journalism: Independent journalists discreetly capture live events using smart glasses, ensuring their “lens of truth” is always active without bulky equipment.

- Hardware Breakthroughs: Why 2026 is Different

For years, the dream of smart glasses faced three main challenges: battery life, heat, and style. By 2026, these issues have largely been addressed.

Solid-State Batteries

The arrival of solid-state battery technology has increased the energy density of wearable devices. Smart glasses in 2026 can now run for 14 to 16 hours of continuous use, even with AI and cameras in operation.

Waveguide Optics and Micro-LEDs

Display technology has evolved from bulky prisms to streamlined waveguides. This allows for lenses that look like regular prescription glasses while still projecting a bright, full-color 1080p overlay. For instance, the Asus ROG Xreal R1 packs a 240Hz refresh rate, a specification once reserved for high-end gaming monitors, into a 90-gram frame.

The Fashion Factor

In 2026, the “Cyborg look” is out. Collaborations between tech firms and luxury and eyewear groups like Kering and EssilorLuxottica have made smart glasses nearly indistinguishable from high-end fashion eyewear. People wear them for their appearance, not just their functionality.

- Economic and Market Dynamics

The smart glasses market has surged in 2026, with global shipments projected to reach 20 million units this year, four times the 2025 total.

The Convergence of Big Tech

- Apple: With “Apple Glass” not expected until 2027, the current competition is mainly among Meta, Samsung, and Google.

- Android XR: Google’s new open platform, Android XR, enables smaller companies like XREAL and Viture to compete with hardware giants by providing a unified, high-quality software ecosystem.

- Enterprise Dominance: More than 50% of Fortune 1000 manufacturers have invested in AR/AI wearable systems, reporting a 40% reduction in repair times.

- Ethical Considerations and the Privacy Debate

The rise of smart glasses has sparked significant concerns about privacy and social norms.

Privacy by Design

To address the “Secret Recording” stigma, 2026 models incorporate built-in privacy features:

- Active LEDs: Recording lights are wired to the camera sensor; if the LED is blocked, the camera stops working.

- Anonymization: On-device AI can automatically “blur” faces of bystanders in recorded footage before it is uploaded to cloud services.

Social Friction

Despite these safeguards, some venues still impose restrictions. Certain restaurants and gyms have “No Glass” zones, echoing the Google Glass bans of the early 2010s. The industry is also developing “Privacy-First” models: glasses that offer AI assistance and displays but lack a camera, designed for sensitive environments.

- The Human Impact: Education, Health, and Accessibility

Beyond the technology, the real story of 2026 is the human impact.

Revolutionizing Accessibility

For visually impaired individuals, smart glasses are transformative. Models like the Envision G3 use AI to verbally describe the surroundings, identify currency, read labels, and navigate complex spaces. These glasses provide a “second pair of eyes,” granting independence to millions.

Immersive Learning

Education has shifted from textbooks to interactive overlays. A biology student can look at a flower and see a labeled 3D diagram of its internal structure floating in the air. Research indicates that AR-guided learning in 2026 boosts knowledge retention by 70% compared to traditional methods.

- The Future: Towards 2030

As we approach the end of the decade, the next frontier for smart glasses is Neural Input. Prototypes are being tested that use EMG (electromyography) sensors in the frames or a wristband to detect the electrical signals of subtle muscle movements, allowing users to “click” and “scroll” through menus with barely perceptible finger gestures.

Final Verdict: The Year the World Changed

2026 is the year smart glasses moved from “the future” to “the present.” By offering generative AI voice interfaces, breaking down language barriers through real-time translation, and enabling a new age of hands-free recording, these devices have become our most personal computers. We are no longer looking back and forth between the world and our devices; we are experiencing the world through them.

The “Eyes-Up” revolution is here, and it is clearer than ever.